With AI-first and AI-now calls continuing to grow throughout all types of organizations, the risk of ethical, legal and reputational damage is undeniable, but so, too, may be the benefits of using this rapidly advancing technology. To help answer the question of how much AI is too much, Ask an Ethicist columnist Vera Cherepanova invites guest ethicist/technologist Garrett Pendergraft of Pepperdine University.

Our CEO is pushing us to integrate AI across every function, immediately. I support the goal, but I’m concerned that rushing implementation without clear oversight could create ethical, legal or reputational risks. I’m not against using AI, but I’d like to make the case for a more deliberate rollout, one that aligns innovation with accountability. How can I do that effectively without appearing resistant to change? - Brandon

This month, I’m joined by guest ethicist Garrett Pendergraft to reflect on a familiar tension in today’s corporate world: the push to adopt AI immediately, and the more subtle and less comfortable question of whether the organization is actually ready for it. In other words, is asking for prudence the same as resisting innovation? And how can leaders advocate for governance without being cast as obstacles to progress?

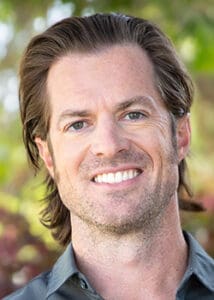

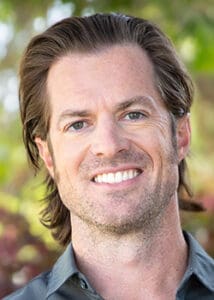

Garrett is a professor of philosophy at Pepperdine University and has a distinctive perspective as someone whose background bridges two worlds, technology and moral philosophy. His is the ideal lens for this particular question.

***

Use the tools, not too much, stay in charge

As Vera mentioned, many years ago I studied computer science. It’s been a while since I’ve been in that world, but I’ve enjoyed staying abreast of it. And Pepperdine used to have a joint degree in computer science and philosophy, which was great to be a part of.

Most of what I’m going to share has been formulated more at the individual level for my business ethics students and some other courses I’ve taught, but I think the principles apply in more general contexts, including corporate settings and executive and governance contexts.

There’s often resistance to adoption of AI tools and technology in general. In the humanities, for example, there’s a lot of resistance to these sorts of things. And, of course, the way that students are using AI tools and LLMs feeds the resistance.

A slogan I came up with was inspired by Michael Pollan’s food rules from about 15 years ago, something like, “Eat food, not too much, mostly plants.” That structure seemed to fit well with my thoughts on using AI: “Use the tools, not too much, stay in charge.”

With the first part, “use the tools,” I think the key is to use them well. And when it comes to higher education, the temptation is to pretend that they don’t exist or try to set up a cloister where they’re not allowed.

For academics, using the tools well involves embracing the possibilities and taking the time to figure out how what we do can be enhanced by what these tools have to offer. So, I think that general principle applies across the board. And I think the temptation in other fields, and in the tech field in particular, is to rush headlong and try to use everything.

This applies maybe more to the “not too much” point, but every week there’s another news story about someone who has been reckless with AI tools. A recent one was Deloitte Australia, which created a report for the government, being paid somewhere around $400,000 for it, and had to admit the report contained a lot of fabricated citations and other clearly AI-generated material. That was embarrassing, and they had to refund the government and redo the report.

The key when it comes to things like human judgment is to view even agentic tools as like having an army of interns at your disposal. You wouldn’t just have your interns do the work for you and then send it off without checking it first.

But this very thing is happening, whether in the Deloitte Australia example, or in cases of briefs filed in various court jurisdictions that include hallucinated citations and other fabrications, or the summer reading list published by a newspaper, complete with fake books, that appears to have been AI generated.

Sometimes friction is the point

The main point on the moral side of things is just not forgetting about your responsibilities to deliver the work that you signed up to do. I think on the practical side, it’s tempting to assume that if we start using these tools, they will just make the quality better or make us faster at producing that quality.

But according to one recent study, experienced engineers who used these tools took even longer to do the task than without them. While time savings may be possible, no matter the industry, the first step is to establish your baseline quality and a baseline amount of time you expect a task to take. And then you systematically evaluate what these tools are doing for you, whether they actually are improving quality or making you faster.

If they don’t improve quality or save time, you need to resist the temptation to use them, even though they’re admittedly fun to use. And that’s where I think the “not too much” part of my advice is useful. Human judgment is crucial because human judgment means figuring out which elements of your work life, social life and family life need to have that human nuance and which ones can be outsourced.

Sometimes refraining from AI use is necessary because you need to just give the work a human touch. And other times refraining is necessary because there’s a certain amount of friction that actually produces learning and growth.

Something we’re always trying to preach to our students, borrowed from the Marine Corps, is: “Embrace the suck.” I think this is useful advice because all of us are partly where we are today because we went through some friction and some struggle and we embraced it to one extent or another.

Again, this is where human judgment is necessary. It’s important to recognize what kinds of friction are essential to growth in skill and in capacity and what kinds of friction are holding us back and therefore can be dispensed with.

There are things that AI allows you to do that you couldn’t do before, which means that your agency has been extended or enhanced, but your human judgment is necessary to determine whether your agency is being enhanced or diminished.

It’s better to use AI well than to ignore it, but I’d argue that not using it at all is better than using it poorly.

Remember what you’re trying to do in the first place

Going back to Brandon’s question, I think resistance to the use of automation and AI often comes from a fear that we won’t be able to stay in charge, and I think one of the defining characteristics of the rush to automation is failing to stay in charge. (This is how we end up with fabricated reading lists and hallucinated citations.) We can avoid the knee-jerk resistance and also the reckless rush by thinking about how to stay in charge.

Examples of the rush to automation include companies like Coinbase or IgniteTech, which required engineers to adopt AI tools and threatened to fire them if they didn’t do it quickly enough.

I think the issue here is the same one described in that classic management consulting article from the 1970s, “On the Folly of Rewarding A While Hoping for B.”

The article gives examples of this in politics, business, war and education. And I think the rush to automation is another example of this. If you want your employees to be more productive, and you’re convinced that they can benefit from leveraging AI tools, then you should just ask for more or better output. If you simply require use of AI tools, focusing on the means rather than the desired end result, then people won’t necessarily be using the tools for the right reasons.

And if people aren’t using AI tools for the right reasons, they may end up producing the opposite effect and being less productive. It’s like the cobra effect: during British colonial rule of India, the authorities tried to solve the problem of too many cobras by offering a bounty on dead cobras. So people just bred cobras, killed them and turned them in for the bounty, with the end result of more cobras overall.

A rush to automation, especially if it includes a simplistic requirement to use AI tools, can do more harm than good.

We should focus on what we’re actually trying to do out there in the world or in our industry and try to figure out exactly how the tools can help with that. I think the bottom line is that the key ingredient that’s always going to be valuable, the irreplaceable part, is always going to be human judgment. These tools will become more powerful, more agentic, more capable of working together and making decisions. The more complex it gets, the more we’re going to have to pay attention to decision points, inputs and outputs and how human judgment can ensure we don’t end up in the apocalypse scenarios or even just the costly or embarrassing scenarios for our business.

Instead, we want to make the most of these new capacities for the good of our business and the overall good of everyone.

***

Garrett’s perspective is so rich and so powerful. And we’ve certainly seen the “Rewarding A While Hoping for B” movie before. Remember Wells Fargo’s attempt to monitor remote employee productivity by tracking keystrokes and screen time? Measure keystrokes, get a lot of keystrokes, as the saying goes.

The lesson applies directly to AI. Thoughtless adoption can create more risk, not less. Human judgment remains an irreplaceable asset. So, Brandon, when you speak with your CEO, don’t frame your concern as “slow down.” Frame it as:

- Let’s aim for better outcomes, not just more AI.

- Let’s pilot, measure and learn, not blindly deploy.

- And let’s keep humans in charge of the decisions that matter.

Use the tools, not too much, stay in charge. That’s not resistance to change. It’s how you make sure AI becomes a force multiplier for your company instead of an expensive shortcut to artificial stupidity.

Readers respond

Last month’s question came from a director serving on the board of a listed company. An employee’s personal post about a polarizing political event went viral, dragging the company into the headlines. Terminating the employee looked like the easy answer, but the dilemma raised deeper questions: When free speech, company reputation and political pressure collide, where does the board’s fiduciary duty lie?

In my response, I noted: “The phrase ‘This is what we stand for’ gets repeated a lot in moments like this. Yet in situations like this, few companies pause to ask whether they actually do stand for the things they say they do. Do your values genuinely inform your decisions, or do they surface only when convenient? When termination is driven more by external pressure than internal principle, it’s not good governance for the sake of the company’s long-term health. It’s managing the headlines.

“Consistency matters, and so do proportionality and due process: facts verified, context understood. Boards’ ability to show that they provided informed oversight and decision-making is tied up in the integrity of the process. The reputational damage from inconsistency and hypocrisy can be far greater than from a single employee’s poorly worded post.

“Another complication is that almost anything can be seen as politically incendiary today, which was not the case in the past. The temptation for quick action to ‘get ahead of the story’ is often political opportunism in disguise. Boards under public scrutiny may convince themselves they’re protecting values, when they’re really just hedging against personal liability.”

“He who rises in anger, suffers losses” — YA

Have a response? Share your feedback on what I got right (or wrong). Send me your comments or questions.
