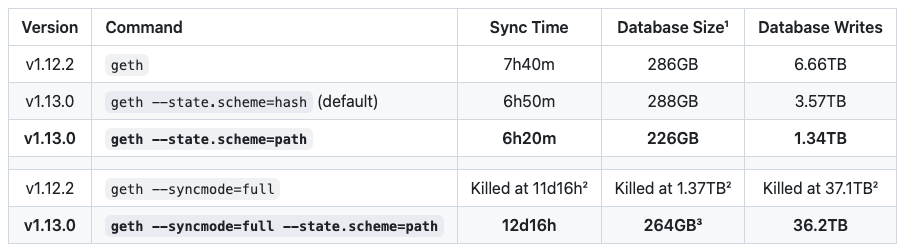

Geth v1.13 comes fairly close on the heels of the 1.12 release family, which is funny, considering its main feature has been in development for a cool 6 years now. 🤯

This post will go into a number of technical and historical details, but if you just want the gist of it, Geth v1.13.0 ships a new database model for storing the Ethereum state, which is both faster than the previous scheme and also has proper pruning implemented. No more junk accumulating on disk and no more guerilla (offline) pruning!

- ¹Excluding ~589GB of ancient data, the same across all configurations.

- ²Hash scheme full sync exceeded our 1.8TB SSD at block ~15.43M.

- ³Size difference vs snap sync attributed to compaction overhead.

Before going ahead though, a shoutout goes to Gary Rong, who has been working on the crux of this rework for the better part of 2 years now! Amazing work and amazing endurance to get this huge chunk of work in!

Gory tech details

Okay, so what's up with this new data model and why was it needed in the first place?

In short, our old way of storing the Ethereum state did not allow us to efficiently prune it. We had a variety of hacks and tricks to accumulate junk more slowly in the database, but we nonetheless kept accumulating it indefinitely. Users could stop their node and prune it offline, or resync the state to get rid of the junk. But it was a very non-ideal solution.

In order to implement and ship real pruning, one that does not leave any junk behind, we needed to break a lot of eggs within Geth's codebase. Effort-wise, we'd compare it to the Merge, only restricted to Geth's internal level:

- Storing state trie nodes by hashes introduces an implicit deduplication (i.e. if two branches of the trie share the same content, which is more likely for contract storage, they get stored only once). This implicit deduplication means that we can never know how many parents (i.e. different trie paths, different contracts) reference some node, and as such, we can never know what is safe and what is unsafe to delete from disk.

  - Any form of deduplication across different paths in the trie had to go before pruning could be implemented. Our new data model stores state trie nodes keyed by their path, not their hash. This slight change means that if two branches previously had the same hash and were stored only once, they will now have different paths leading to them, so even though they have the same content, they will be stored separately, twice.

- Storing multiple state tries in the database introduces a different form of deduplication. In our old data model, where trie nodes were keyed by hash, the vast majority of trie nodes stay the same between consecutive blocks. This results in the same issue: we have no idea how many blocks reference the same state, which prevents a pruner from operating effectively. Changing the data model to path-based keys makes storing multiple tries impossible altogether: the same path key (e.g. the empty path for the root node) would need to store different things for each block.

  - The second invariant we needed to break was the capability to store arbitrarily many states on disk. The only way to have effective pruning, as well as the only way to represent trie nodes keyed by path, was to restrict the database to contain exactly 1 state trie at any point in time. Initially this trie is the genesis state, after which it needs to follow the chain state as the head progresses.

  - The simplest solution for storing 1 state trie on disk is to make it that of the head block. Unfortunately, that is overly simplistic and introduces two issues. First, mutating the trie on disk block-by-block entails a lot of writes. While in sync it may not be that noticeable, but when importing many blocks (e.g. full sync or catchup) it becomes unwieldy. The second issue is that before finality, the chain head might wiggle a bit across mini-reorgs. They are not common, but since they can happen, Geth needs to handle them gracefully. Having the persistent state locked to the head makes it very hard to switch to a different side-chain.

  - The solution is analogous to how Geth's snapshots work. The persistent state does not track the chain head; rather, it is a number of blocks behind. Geth will always maintain the trie changes done in the last 128 blocks in memory. If there are multiple competing branches, all of them are tracked in memory in a tree shape. As the chain moves forward, the oldest (HEAD-128) diff layer is flattened down. This permits Geth to do blazing fast reorgs within the top 128 blocks, with side-chain switches being essentially free.

  - The diff layers, however, do not solve the issue that the persistent state needs to move forward on every block (it would just be delayed). To avoid disk writes block-by-block, Geth also has a dirty cache between the persistent state and the diff layers, which accumulates writes. The advantage is that consecutive blocks tend to change the same storage slots a lot, and the top of the trie is overwritten all the time. The dirty buffer therefore short-circuits these writes, and they never need to hit disk. When the buffer gets full, however, everything is flushed to disk.

- With the diff layers in place, Geth can do 128-block-deep reorgs instantly. Sometimes, however, it can be desirable to do a deeper reorg. Perhaps the beacon chain is not finalizing, or perhaps there was a consensus bug in Geth and an upgrade needs to "undo" a larger portion of the chain. Previously, Geth could just roll back to an old state it had on disk and reprocess blocks on top. With the new model of only ever having 1 state on disk, there's nothing to roll back to.

  - Our solution to this issue is the introduction of a notion called reverse diffs. Every time a new block is imported, a diff is created that can be used to convert the post-state of the block back to its pre-state. The last 90K of these reverse diffs are stored on disk. Whenever a very deep reorg is requested, Geth can take the persistent state on disk and start applying diffs on top until the state is mutated back to some very old version. Then it can switch to a different side-chain and process blocks on top of that.

The above is a condensed summary of what we needed to modify in Geth's internals to introduce our new pruner. As you can see, many invariants changed, so much so that Geth essentially operates in a completely different way compared to how the old Geth worked. There is no way to simply switch from one model to the other.

We of course recognize that we can't just "stop working" because Geth has a new data model, so Geth v1.13.0 has two modes of operation (talk about OSS maintenance burden). Geth will keep supporting the old data model (furthermore, it will stay the default for now), so your node will not do anything "funny" just because you updated Geth. You can even force Geth to stick to the old mode of operation long term via `--state.scheme=hash`.

If you wish to switch to our new mode of operation, however, you will need to resync the state (you can keep the ancients FWIW). You can do it manually or via `geth removedb` (when asked, delete the state database but keep the ancient database). Afterwards, start Geth with `--state.scheme=path`. For now, the path model is not the default one, but if a previous database already exists and no state scheme is explicitly requested on the CLI, Geth will use whatever is contained in the database. Our suggestion is to always specify `--state.scheme=path` just to be on the safe side. If no serious issues surface in our path scheme implementation, Geth v1.14.x will probably switch over to it as the default format.
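The rules for picking a scheme can be condensed into a small decision function. This is a hypothetical Go sketch, not Geth's code: `resolveScheme` and its inputs are illustrative names. Refusing a flag that contradicts the existing database is an assumption, based on the point above that the two models cannot simply be switched in place.

```go
package main

import "fmt"

// Scheme names as used by --state.scheme; the constants are illustrative.
const (
	hashScheme = "hash"
	pathScheme = "path"
)

// resolveScheme picks the state scheme for a node (hypothetical helper).
// flagValue is "" when --state.scheme was not given; stored is "" when no
// state database exists yet.
func resolveScheme(flagValue, stored string) (string, error) {
	if flagValue != "" && flagValue != hashScheme && flagValue != pathScheme {
		return "", fmt.Errorf("unknown state scheme %q", flagValue)
	}
	switch {
	case flagValue == "" && stored == "":
		return hashScheme, nil // fresh node: hash is still the v1.13 default
	case flagValue == "":
		return stored, nil // follow whatever the existing database contains
	case stored == "" || stored == flagValue:
		return flagValue, nil
	default:
		// Assumption: a mismatch is refused, since switching needs a resync.
		return "", fmt.Errorf("requested %s scheme, but database uses %s", flagValue, stored)
	}
}

func main() {
	for _, c := range [][2]string{{"", ""}, {"", "path"}, {"path", ""}, {"path", "hash"}} {
		scheme, err := resolveScheme(c[0], c[1])
		fmt.Printf("flag=%q db=%q -> %q %v\n", c[0], c[1], scheme, err)
	}
}
```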

A couple of notes to keep in mind:

- If you are running private Geth networks using `geth init`, you will need to specify `--state.scheme` for the init step too, otherwise you will end up with an old-style database.

- For archive node operators: the new data model will be compatible with archive nodes (and will bring the same amazing database sizes as Erigon or Reth), but it needs a bit more work before it can be enabled.

Also, a word of warning: Geth's new path-based storage is considered stable and production ready, but it has obviously not yet been battle tested outside of the team. Everyone is welcome to use it, but if a crash or a consensus failure of your node carries significant risk for you, you might want to wait a bit to see whether anyone with a lower risk profile hits any issues.

Now onto some side-effect surprises…

Semi-instant shutdowns

Head state missing, repairing chain… 😱

…the startup log message we're all dreading, knowing our node will be offline for hours… is going away!!! But before saying goodbye to it, let's quickly recap what it was, why it happened, and why it's becoming irrelevant.

Prior to Geth v1.13.0, the Merkle Patricia trie of the Ethereum state was stored on disk as a hash-to-node mapping. Meaning, each node in the trie was hashed, and the value of the node (whether leaf or internal node) was inserted into a key-value store, keyed by the computed hash. This was both very elegant from a mathematical perspective and had a cute optimization: if different parts of the state had the same subtrie, those would get deduplicated on disk. Cute… and fatal.
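The deduplication, and why it is fatal for pruning, fits in a few lines of Go. In this sketch SHA-256 stands in for Ethereum's Keccak-256, which the Go standard library lacks. The `contractA/0x0`-style keys are a hypothetical simplification of path-based keys, not Geth's actual key layout.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// nodeKeyByHash keys a node by its content hash. Ethereum uses Keccak-256;
// SHA-256 from the standard library stands in for it in this sketch.
func nodeKeyByHash(_ string, node []byte) string {
	sum := sha256.Sum256(node)
	return hex.EncodeToString(sum[:])
}

// nodeKeyByPath keys a node by where it sits: owner (contract) plus trie path.
func nodeKeyByPath(path string, _ []byte) string { return path }

// store writes the same node under two different trie paths using keyFn.
func store(keyFn func(string, []byte) string) map[string][]byte {
	db := map[string][]byte{}
	node := []byte("leaf: slot 0 = 1") // identical storage in two contracts
	for _, path := range []string{"contractA/0x0", "contractB/0x0"} {
		db[keyFn(path, node)] = node
	}
	return db
}

func main() {
	byHash, byPath := store(nodeKeyByHash), store(nodeKeyByPath)
	fmt.Println(len(byHash), len(byPath)) // 1 2

	// Contract A's storage is wiped. With path keys its node can be deleted
	// without affecting contract B. With hash keys the single shared entry
	// carries no owner information, so deleting it would also break B.
	delete(byPath, "contractA/0x0")
	_, ok := byPath["contractB/0x0"]
	fmt.Println(ok) // true
}
```

Under hash keys, both contracts point at one entry, and nothing in that entry records how many owners it has. Under path keys, each copy can be deleted independently, at the cost of storing it twice.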

When Ethereum launched, there was only archive mode. Every state trie of every block was persisted to disk. Simple and elegant. Of course, it soon became clear that the storage requirement of keeping all the historical state forever is prohibitive. Fast sync did help. By periodically resyncing, you could get a node with only the latest state persisted and then pile only subsequent tries on top. Still, the growth rate required more frequent resyncs than was tolerable in production.

What we needed was a way to prune historical state that is no longer relevant for operating a full node. There were a number of proposals, even 3-5 implementations in Geth, but each had such a huge overhead that we discarded them.

Geth ended up having a very complex ref-counting in-memory pruner. Instead of writing new states to disk immediately, we kept them in memory. As the blocks progressed, we piled new trie nodes on top and deleted old ones that were not referenced by the last 128 blocks. As this memory area got full, we dripped the oldest, still-referenced nodes to disk. While far from perfect, this solution was an enormous gain: disk growth got drastically cut, and the more memory given, the better the pruning performance.
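The reference counting at the heart of that pruner can be sketched as follows. This is a simplified, hypothetical model in which `memDB`, `insert`, `reference` and `dereference` are illustrative names. A retention window of 1 block stands in for 128, and dripping the oldest nodes to disk when memory fills up is left out.

```go
package main

import "fmt"

// memNode is a trie node held in memory by the old hash-keyed pruner.
type memNode struct {
	children []string // hashes of the child nodes
	refs     int      // parents and block roots referencing this node
}

type memDB struct{ nodes map[string]*memNode }

// insert adds a node and references its children; children must already
// be present (tries are committed bottom-up). Known hashes are deduplicated.
func (db *memDB) insert(hash string, children ...string) {
	if _, ok := db.nodes[hash]; ok {
		return
	}
	db.nodes[hash] = &memNode{children: children}
	for _, c := range children {
		db.nodes[c].refs++
	}
}

// reference pins a block's state root while it is within the recent window.
func (db *memDB) reference(root string) { db.nodes[root].refs++ }

// dereference drops one reference; a node nobody references is deleted and
// its children are dereferenced in turn.
func (db *memDB) dereference(hash string) {
	n, ok := db.nodes[hash]
	if !ok {
		return
	}
	n.refs--
	if n.refs > 0 {
		return
	}
	delete(db.nodes, hash)
	for _, c := range n.children {
		db.dereference(c)
	}
}

func main() {
	db := &memDB{nodes: map[string]*memNode{}}
	// Block 1: root r1 over leaves a and b.
	db.insert("a")
	db.insert("b")
	db.insert("r1", "a", "b")
	db.reference("r1")
	// Block 2 changes b into b2; leaf a is shared with block 1.
	db.insert("b2")
	db.insert("r2", "a", "b2")
	db.reference("r2")
	// Block 1 leaves the retention window (128 blocks in Geth, 1 here).
	db.dereference("r1")
	fmt.Println(len(db.nodes)) // 3: a, b2 and r2 remain
}
```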

The in-memory pruner, however, had a caveat: it only ever persisted very old, still-live nodes, keeping anything remotely recent in RAM. When the user wanted to shut Geth down, the recent tries, all kept in memory, needed to be flushed to disk. But due to the data layout of the state (hash-to-node mapping), inserting hundreds of thousands of trie nodes into the database took many, many minutes (random insertion order due to hash keying). If Geth was killed sooner by the user or a service monitor (systemd, docker, etc.), the state stored in memory was lost.

On the next startup, Geth would detect that the state associated with the latest block never got persisted. The only resolution is to start rewinding the chain until a block is found with the entire state available. Since the pruner only ever drips nodes to disk, this rewind would usually undo everything back to the last successful shutdown. Geth did occasionally flush an entire dirty trie to disk to dampen this rewind, but that still required hours of processing after a crash.
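The rewind itself is conceptually a simple backwards search, and its cost is the distance it has to travel. In this sketch `hasState` is a hypothetical callback for "is this block's complete state on disk". The block numbers are made up for illustration.

```go
package main

import "fmt"

// findRecoveryPoint walks back from head until it finds a block whose full
// state is on disk. hasState is a hypothetical stand-in for checking that
// the block's state root (and everything under it) is present.
func findRecoveryPoint(head uint64, hasState func(uint64) bool) (uint64, bool) {
	for n := head; ; n-- {
		if hasState(n) {
			return n, true
		}
		if n == 0 {
			return 0, false
		}
	}
}

func main() {
	// Illustrative numbers only: state last fully persisted at block 1000.
	persisted := map[uint64]bool{0: true, 1000: true}
	head := uint64(1500)
	n, _ := findRecoveryPoint(head, func(b uint64) bool { return persisted[b] })
	fmt.Printf("rewind to %d, re-execute %d blocks\n", n, head-n)
}
```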

We dug ourselves a very deep hole:

- The pruner needed as much memory as it could get to be effective. But the more memory it had, the higher the probability of a timeout on shutdown, resulting in data loss and a chain rewind. Giving it less memory caused more junk to end up on disk.

- State was stored on disk keyed by hash, so it implicitly deduplicated trie nodes. But deduplication makes it impossible to prune from disk, because it is prohibitively expensive to ensure that nothing references a node anymore across all tries.

- Reduplicating trie nodes could be done by using a different database layout. But changing the database layout would have made fast sync inoperable, as the protocol was designed specifically to be served by this data model.

- Fast sync could be replaced by a different sync algorithm that does not rely on the hash mapping. But dropping fast sync in favor of another algorithm requires all clients to implement it first, otherwise the network splinters.

- A new sync algorithm, one based on state snapshots instead of tries, is very effective, but it requires someone to maintain and serve the snapshots. It is essentially a second consensus-critical version of the state.

It took us quite a while to get out of the above hole (yes, these were the laid-out steps all along):

- 2018: Snap sync's initial designs are made, and the necessary supporting data structures are devised.

- 2019: Geth starts generating and maintaining the snapshot acceleration structures.

- 2020: Geth prototypes snap sync and defines the final protocol specification.

- 2021: Geth ships snap sync and switches over to it from fast sync.

- 2022: Other clients implement consuming snap sync.

- 2023: Geth switches from hash to path keying.

  - Geth becomes incapable of serving the old fast sync.

  - Geth reduplicates persisted trie nodes to permit disk pruning.

  - Geth drops in-memory pruning in favor of proper persistent disk pruning.

One request to other clients at this point: please implement serving snap sync, not just consuming it. Currently, Geth is the only participant in the network that maintains the snapshot acceleration structure that all other clients use to sync.

Where does this very long detour land us? With Geth's very core data representation swapped from hash keys to path keys, we could finally drop our beloved in-memory pruner in exchange for a shiny new on-disk pruner, which always keeps the state on disk fresh and recent. Of course, our new pruner also uses an in-memory component to make it a bit more optimal, but it primarily operates on disk, and its effectiveness is 100%, independent of how much memory it has to operate in.

With the new disk data model and reimplemented pruning mechanism, the data kept in memory is small enough to be flushed to disk within a few seconds on shutdown. But even so, in case of a crash or a user/process-manager insta-kill, Geth will only ever need to rewind and re-execute a couple hundred blocks to catch up with its prior state.

Say goodbye to the long startup times: Geth v1.13.0 opens a brave new world (with `--state.scheme=path`, mind you).

Drop the `--cache` flag

No, we did not drop the `--cache` flag, but chances are, you should!

Geth's `--cache` flag has a bit of a murky past, going from a simple (and ineffective) parameter to a very complex beast whose behavior is fairly hard to convey and also to properly account for.

Back in the Frontier days, Geth did not have many parameters to tweak to try to make it go faster. The only optimization we had was a memory allowance for LevelDB to keep more of the recently touched data in RAM. Interestingly, allocating RAM to LevelDB vs. letting the OS cache disk pages in RAM is not that different. The only time explicitly assigning memory to the database is beneficial is when you have multiple OS processes shuffling lots of data, thrashing each other's OS caches.

Back then, letting users allocate memory for the database seemed like a good shoot-in-the-dark attempt to make things go a bit faster. It turned out to also be a good shoot-yourself-in-the-foot mechanism, as Go's garbage collector really, really dislikes large idle memory chunks: the GC runs when it has piled up as much junk as it had useful data left after the previous run (i.e. it will double the RAM requirement). Thus began the saga of Killed and OOM crashes…
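The doubling comes from Go's GC pacing. With the default `GOGC=100`, the heap is allowed to grow to roughly twice the live data left after the previous collection before the next one completes. The sketch below shows this arithmetic in simplified form, and the cache sizes are illustrative. The real pacer (Go 1.18+) also accounts for goroutine stacks and globals, and setting `GOMEMLIMIT` can lower the target.

```go
package main

import "fmt"

// nextGCTarget is a simplified form of the Go GC pacer's heap target:
// live heap plus GOGC percent of it. Since Go 1.18 the real formula also
// counts GC roots (goroutine stacks, globals), and GOMEMLIMIT can lower
// the target; both are ignored here.
func nextGCTarget(liveHeapMB, gogc uint64) uint64 {
	return liveHeapMB + liveHeapMB*gogc/100
}

func main() {
	// Illustrative: a 2GB database cache living on the Go heap, plus 1GB
	// of other live data, with the default GOGC=100.
	fmt.Println(nextGCTarget(2048+1024, 100)) // 6144
}
```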

Fast-forward half a decade and the `--cache` flag, for better or worse, has evolved:

- Depending on whether you're on mainnet or a testnet, `--cache` defaults to 4GB or 512MB.

- 50% of the cache allowance is allocated to the database to use as a dumb disk cache.

- 25% of the cache allowance is allocated to in-memory pruning, 0% for archive nodes.

- 10% of the cache allowance is allocated to snapshot caching, 20% for archive nodes.

- 15% of the cache allowance is allocated to trie node caching, 30% for archive nodes.
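To make the percentages concrete, the Go sketch below splits a `--cache` allowance according to the list above. The `cacheSplit` type and `allocate` helper are hypothetical and only do the arithmetic; they do not mirror how Geth wires these budgets internally.

```go
package main

import "fmt"

// cacheSplit holds percentages (or, after allocate, MB) per cache consumer.
// The type and field names are illustrative, not Geth's.
type cacheSplit struct{ Database, Pruning, Snapshot, TrieNodes uint64 }

var (
	fullNode    = cacheSplit{Database: 50, Pruning: 25, Snapshot: 10, TrieNodes: 15}
	archiveNode = cacheSplit{Database: 50, Pruning: 0, Snapshot: 20, TrieNodes: 30}
)

// allocate converts a total allowance in MB into per-consumer budgets,
// rounding down to whole MB.
func allocate(totalMB uint64, s cacheSplit) cacheSplit {
	return cacheSplit{
		Database:  totalMB * s.Database / 100,
		Pruning:   totalMB * s.Pruning / 100,
		Snapshot:  totalMB * s.Snapshot / 100,
		TrieNodes: totalMB * s.TrieNodes / 100,
	}
}

func main() {
	const mainnetDefaultMB = 4096 // the 4GB mainnet default
	fmt.Printf("%+v\n", allocate(mainnetDefaultMB, fullNode))
	// {Database:2048 Pruning:1024 Snapshot:409 TrieNodes:614}
	fmt.Printf("%+v\n", allocate(mainnetDefaultMB, archiveNode))
	// {Database:2048 Pruning:0 Snapshot:819 TrieNodes:1228}
}
```

On a default mainnet full node, this means roughly 1GB of the 4GB went to the in-memory pruner. That is the share users were effectively buying when they raised `--cache` to accumulate less junk.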

The overall size and each percentage can be individually configured via flags, but let's be honest, nobody understands how to do that or what the effect will be. Most users bumped up `--cache` because it led to less junk accumulating over time (that 25% part), but it also led to potential OOM issues.

Over the past two years, we've been working on a variety of changes to soften the insanity:

- Geth's default database was switched to Pebble, which uses caching layers outside of the Go runtime.

- Geth's snapshot and trie node caches started using fastcache, which also allocates outside of the Go runtime.

- The new path scheme prunes state on the fly, so the old pruning allowance was reassigned to the trie cache.

The net effect of all these changes is that using Geth's new path database scheme should result in 100% of the cache being allocated outside of Go's GC arena. As such, raising or lowering it should have no adverse effects on how the GC works or how much memory is used by the rest of Geth.

That said, the `--cache` flag also no longer has any influence whatsoever on pruning or database size, so users who previously tweaked it for this purpose can drop the flag. Users who just set it high because they had the RAM available should also consider dropping the flag and seeing how Geth behaves without it. The OS will still use any free memory for disk caching, so leaving it unset (i.e. lower) will likely result in a more robust system.

Epilogue

As with all our previous releases, you can find the: